AI Workflow

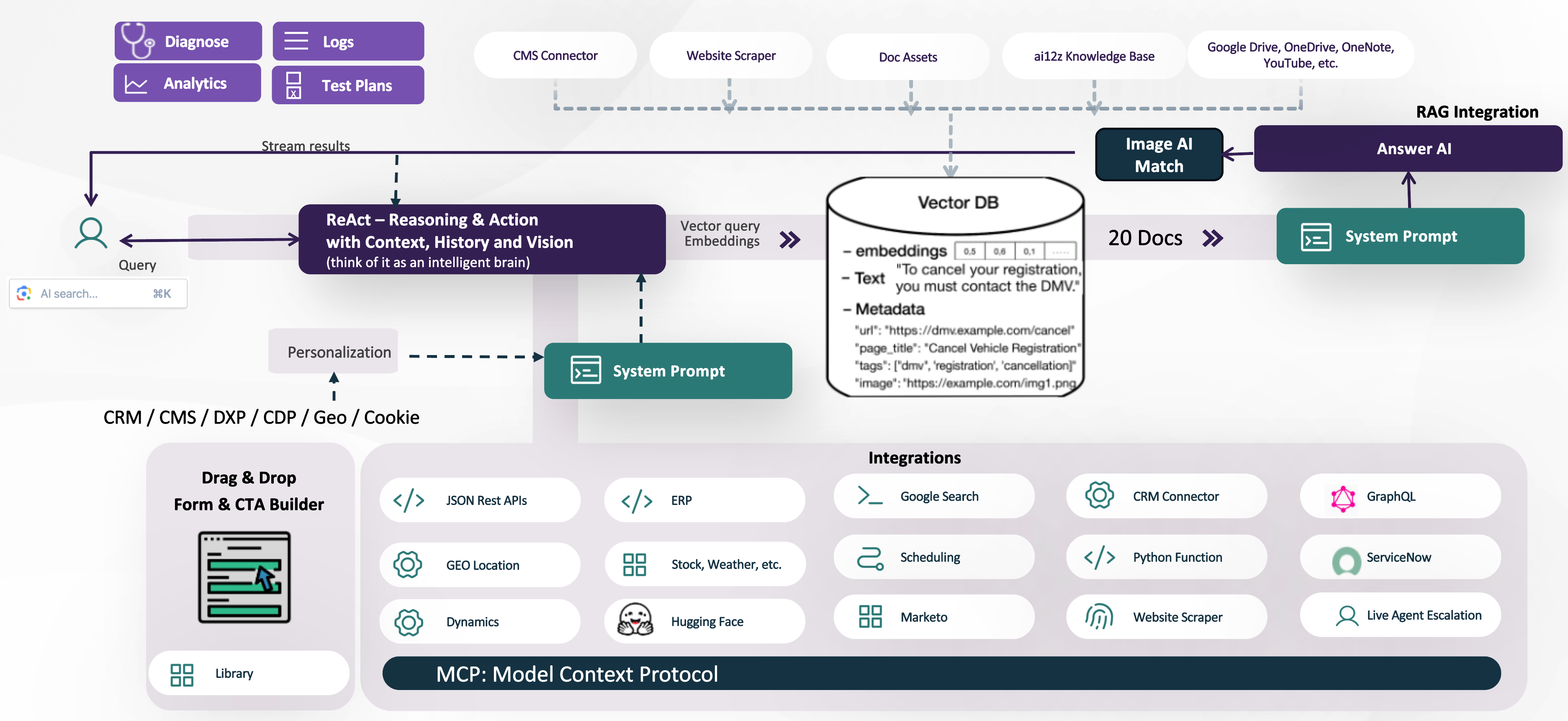

AI Workflow: ReAct Agentic Architecture, Integrations, and Forms

A Modern Approach: ReAct – Reasoning, Action, and Real Results

The ai12z platform is built on a next-generation ReAct agentic workflow—centered on a powerful Reasoning Engine that does more than just answer questions. It plans, takes action, and orchestrates the entire customer journey, drawing from your content, your systems, and real-time context.

When a user engages (with a question, search, or image), ai12z’s Reasoning Engine (LLM) receives a system prompt packed with:

- A dynamic list of available integrations, tools, and forms

- The full history and context of the conversation

- Relevant metadata (language, geo, URL, user attributes)

- Business goals and any constraints set in the prompt

The magic: The Reasoning Engine creates a plan to achieve the user’s goal. The plan may involve calling multiple integrations or forms in sequence, asking clarifying questions, or combining live and historical data. Plans can adapt in real time as the conversation evolves.
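As a rough illustration of the plan, act, observe cycle described here, the following minimal sketch runs a tiny ReAct-style loop. Everything in it is an assumption made for the example: the tool names (`check_inventory`, `book_test_ride`), the sample stock data, and the `scripted_reasoner` function, which stands in for the LLM with hard-coded rules. It is not ai12z's implementation.

```python
from typing import Callable

# Hypothetical tools; a real deployment would call integrations or forms.
def check_inventory(item: str) -> str:
    stock = {"red bike": 3}  # illustrative sample data
    return f"{item}: {stock.get(item, 0)} in stock"

def book_test_ride(item: str) -> str:
    return f"test ride booked for {item}"

TOOLS: dict[str, Callable[[str], str]] = {
    "check_inventory": check_inventory,
    "book_test_ride": book_test_ride,
}

def scripted_reasoner(goal: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: picks the next (action, argument) from what was observed so far."""
    if not observations:
        return ("check_inventory", goal)
    if len(observations) == 1 and ": 0 in stock" not in observations[0]:
        return ("book_test_ride", goal)
    return ("finish", "; ".join(observations))

def run_react(goal: str, max_steps: int = 5) -> str:
    """Alternate between reasoning and acting until the reasoner decides to finish."""
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = scripted_reasoner(goal, observations)
        if action == "finish":
            return arg
        observations.append(TOOLS[action](arg))  # act, then record the observation
    return "; ".join(observations)

print(run_react("red bike"))
print(run_react("blue bike"))
```

The second call shows the plan adapting: because the inventory check reports no stock, the reasoner skips the booking step instead of following a fixed script.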

Integrations: Connecting Content, Data, and Actions

ai12z brings your ecosystem to life—connecting with CMS, CRM, DXP, inventory, scheduling, and virtually any third-party service via:

- MCP Protocol (Model Context Protocol)

- GraphQL & REST APIs

- Out-of-the-box connectors: Email, SMS, Google Maps, Stock, etc.

- Answer AI (RAG): RAG is the default integration. The ReAct workflow decides whether a query should call another integration and falls back to Answer AI when none applies.

Your assistant doesn’t just answer questions—it books, updates, checks inventory, pulls reports, and more.

RAG: Retrieval-Augmented Generation as Just Another Agent

In ai12z, RAG (Retrieval-Augmented Generation) is not the end-game—it’s just one of many tools the Reasoning Engine can deploy.

- If no integration has the answer, the ReAct workflow hands off to RAG to find relevant info from your connected docs, sites, assets, and knowledge bases.

- RAG can work in parallel with other agents—think side-by-side product comparisons, collecting answers from multiple sources, or combining live API data with your proprietary knowledge.
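The fallback behavior in the two points above can be sketched as a small router. This is an assumption-heavy illustration: `order_status`, `store_hours`, and `rag_answer` are hypothetical stand-ins, and simple keyword checks replace the Reasoning Engine's decision about which integrations apply.

```python
from typing import Callable, Optional

def order_status(query: str) -> Optional[str]:
    """Hypothetical integration: answers only order-related queries."""
    if "order" in query.lower():
        return "Your order has shipped."
    return None

def store_hours(query: str) -> Optional[str]:
    """Hypothetical integration: answers only questions about opening hours."""
    if "hours" in query.lower():
        return "Open 9am to 5pm, Monday to Friday."
    return None

def rag_answer(query: str) -> str:
    """Stand-in for Answer AI (RAG): a real version would search indexed content."""
    return f"[RAG] Best matching passage for: {query}"

INTEGRATIONS: list[Callable[[str], Optional[str]]] = [order_status, store_hours]

def answer(query: str) -> list[str]:
    """Collect answers from every integration that applies; fall back to RAG if none do."""
    results = [r for r in (tool(query) for tool in INTEGRATIONS) if r is not None]
    return results or [rag_answer(query)]

print(answer("Where is my order?"))
print(answer("What is your return policy?"))
```

A query that matches several integrations returns several answers, which mirrors the idea of combining sources rather than stopping at the first match.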

Result: Every query gets the best, most relevant response—no dead ends, no rigid scripts.

Why ReAct and ai12z Beat Traditional Bot Frameworks

- Traditional bots rely on scripts and intent trees: rigid, high-maintenance, and easily outgrown.

- ai12z Agents are adaptive: they use live context, continuous learning, and dynamic integration to deliver relevant, brand-safe answers.

Key Differences:

- Adaptive, real-time conversations: Not tied to scripts, responds naturally and intelligently

- Integrated with all your content: No manual retraining for new info

- Seamless actions: Can complete tasks, not just answer

- Low-code, fast deployment: Business users and devs can launch and optimize in days

Deep Dive: How it Works

- User Input: Users ask a question (text, voice, or image). The system can process text, understand uploaded images, and personalize responses using real-time context (location, language, etc.).

- Planning & Orchestration: The Reasoning Engine reviews available tools, integrations, and forms, and creates a step-by-step plan, possibly involving multiple agents (including RAG), API calls, and custom business logic.

- Search & Retrieval: For knowledge-based questions, the system queries the Vector Database (storing text, embeddings, and metadata) to retrieve and rank the most relevant info.

  Inside the Vector DB:

  - Embeddings: Numeric vector representations for rapid semantic search

  - Text: Source snippets

  - Metadata: URL, page title, tags, images, and more

- Answer Generation: The results from RAG and other integrations are used to build a final system prompt, which the Answer AI LLM uses to craft a complete, context-aware response.

- Rich Interactions & Forms: Output isn’t just a text bubble. ai12z supports branded forms, CTAs, and dynamic UI controls (validation fields, date pickers, sliders, file/image upload, and more), making it easy to collect info and guide users to take action.

- Continuous Optimization: Built-in analytics, logs, and diagnostics empower you to optimize performance, tune prompts, and ensure the assistant always puts your brand’s best foot forward.
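To make the Search & Retrieval step more concrete, here is a minimal sketch of semantic search over records that hold text, an embedding, and metadata. The three-dimensional embeddings are hand-written toy values, the `Record` class and sample pages are hypothetical, and cosine similarity is assumed as the ranking measure; a production vector database would use embeddings from a model and an indexed search.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Record:
    text: str                      # source snippet
    embedding: list[float]         # numeric vector used for semantic search
    metadata: dict[str, str] = field(default_factory=dict)  # e.g. URL, page title

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_embedding: list[float], records: list[Record], top_k: int = 2):
    """Score every record against the query and return the top_k (score, record) pairs."""
    scored = [(cosine(query_embedding, r.embedding), r) for r in records]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

# Toy records with made-up embeddings (illustrative only).
RECORDS = [
    Record("Returns are accepted within 30 days.", [0.9, 0.1, 0.0], {"url": "/returns", "title": "Returns"}),
    Record("We ship worldwide.", [0.1, 0.9, 0.0], {"url": "/shipping", "title": "Shipping"}),
    Record("Contact support by email.", [0.0, 0.1, 0.9], {"url": "/support", "title": "Support"}),
]

hits = search([1.0, 0.2, 0.0], RECORDS)
for score, record in hits:
    print(f"{score:.3f} {record.metadata['url']} {record.text}")
```

The retrieved text and metadata are what would then be passed along to the Answer Generation step.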

Dynamic System Prompts & Tokens

To maximize relevance, ai12z supports dynamic tokens in prompts, allowing the assistant to reference:

- {query} – The latest user question

- {vector-query} – Context-optimized version of the question

- {history} – Past conversation threads

- {language}, {geo}, {origin} – Key user/session data

- {attributes} – Custom attributes for deeper personalization, using JS to include Geo info, cookie data, etc.

- {org_name}, {purpose} – For tailoring brand tone and objectives

- ...and more, including the context of the web page the controls are on
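One way to picture how such tokens could be filled in is a simple placeholder substitution. The sketch below is an assumption about the mechanics, not a description of ai12z's internals: the `render` helper, the sample template, and the sample values are all hypothetical. A regular expression is used so that unrecognized tokens pass through unchanged.

```python
import re

# Matches {token} placeholders made of lowercase letters, underscores, and hyphens.
TOKEN = re.compile(r"\{([a-z_-]+)\}")

def render(template: str, values: dict[str, str]) -> str:
    """Replace known {token} placeholders; leave unknown tokens untouched."""
    return TOKEN.sub(lambda m: values.get(m.group(1), m.group(0)), template)

# Hypothetical prompt template and session values.
template = (
    "You are the assistant for {org_name}. Reply in {language}. "
    "Search query: {vector-query}. User asked: {query}"
)
values = {
    "org_name": "Example Co",
    "language": "English",
    "vector-query": "return policy for bikes",
    "query": "Can I send it back?",
}

print(render(template, values))
```

Leaving unknown tokens in place makes a missing value easy to spot in logs, rather than silently producing an empty string.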

In Summary

ai12z’s ReAct agentic workflow is a step change from yesterday’s chatbots. It orchestrates, reasons, and acts—drawing on your content, your data, and your goals. The result? A branded digital experience that’s as helpful and dynamic as your best team member.

Ready to power up your digital journey? See how ai12z can transform your customer experience—let’s get started!