Custom Agent - Python

Overview, Purpose, and Demo

Why Use Python Functions in Your Custom Agents?

Integrating Python functions into your Custom Agents provides a flexible and powerful way to transform, enrich, and personalize the data returned by external endpoints before it’s presented to the user. Since Python has direct access to both the source data (from your chosen REST or GraphQL endpoint) and the LLM parameters (such as user inputs or contextual variables), it enables sophisticated manipulations that go far beyond simple data retrieval.

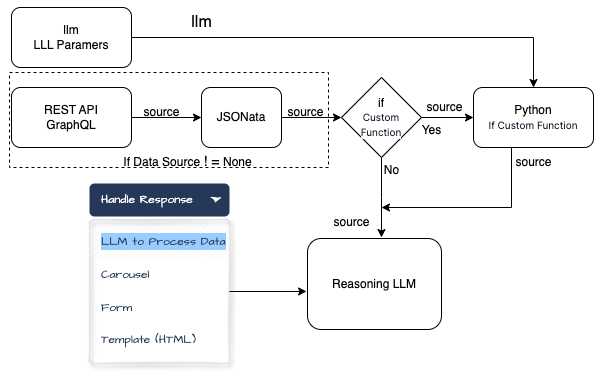

Block diagram

Below are eight examples illustrating the kinds of transformations and enhancements you can implement with Python functions:


Filtering & Extraction:

Scenario: You’ve fetched a large dataset of user activities from a GraphQL endpoint.

Action: Use Python to filter the dataset based on a specific user input parameter (e.g., "task_type") and return only the entries that match that category.

Outcome: The LLM receives a concise, relevant subset of data that directly answers the user’s query.
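A minimal sketch of this filtering step. It assumes the endpoint returns a list of dictionaries with a hypothetical "type" field and that the parameter is named "task_type". In the agent, llm and source are supplied for you; the stand-in values below only make the sketch runnable on its own.

```python
# Stand-ins for the objects the agent provides (hypothetical sample data).
llm = {"llm_query": "Show my review tasks", "task_type": "review"}
source = [
    {"id": 1, "type": "review", "title": "Review PR"},
    {"id": 2, "type": "coding", "title": "Fix login bug"},
    {"id": 3, "type": "review", "title": "Review design doc"},
]


def filter_by_type(activities, task_type):
    # Keep only the entries whose "type" matches the requested category.
    return [item for item in activities if item.get("type") == task_type]


# Whatever is assigned to result replaces source before it reaches the LLM.
result = filter_by_type(source, llm["task_type"])
```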

Data Aggregation & Summarization:

Scenario: A REST API returns raw sales numbers for multiple products.

Action: Python can sum total sales, compute averages, or calculate growth percentages before returning a single, informative result.

Outcome: Instead of merely listing raw figures, the LLM can provide a meaningful summary, e.g., “Total sales increased by 10% this quarter.”
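The aggregation could look roughly like the sketch below. The field names ("last_quarter", "this_quarter") and the figures are assumptions made for illustration; a real API will have its own response shape.

```python
# Stand-in for the REST response (hypothetical field names and figures).
source = [
    {"product": "A", "last_quarter": 400, "this_quarter": 450},
    {"product": "B", "last_quarter": 600, "this_quarter": 650},
]


def summarize_sales(rows):
    previous = sum(row["last_quarter"] for row in rows)
    current = sum(row["this_quarter"] for row in rows)
    # Guard against division by zero when there is no prior-quarter data.
    growth = (current - previous) / previous * 100 if previous else None
    return {
        "total_sales": current,
        "average_per_product": current / len(rows) if rows else 0,
        "growth_percent": round(growth, 1) if growth is not None else None,
    }


# The LLM receives one compact summary instead of the raw rows.
result = summarize_sales(source)
```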

Unit Conversion & Formatting:

Scenario: The user requests temperature data from a weather API.

Action: Convert temperatures from Kelvin to Celsius or Fahrenheit using Python, then adjust number formatting, round decimals, or append units.

Outcome: The LLM can present neatly formatted, user-friendly metrics tailored to the user’s preferred units.
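As an illustration, the conversion and formatting might be written as follows. The "units" parameter and the "temp_kelvin" field are hypothetical names chosen for this sketch, not part of any specific weather API.

```python
# Stand-ins for the objects the agent provides (hypothetical names).
llm = {"llm_query": "How warm is it in Oslo?", "units": "celsius"}
source = {"city": "Oslo", "temp_kelvin": 293.15}


def format_temperature(kelvin, units):
    celsius = kelvin - 273.15
    if units == "fahrenheit":
        return f"{celsius * 9 / 5 + 32:.1f} °F"
    # Default to Celsius for any other value.
    return f"{celsius:.1f} °C"


result = {
    "city": source["city"],
    "temperature": format_temperature(source["temp_kelvin"], llm["units"]),
}
```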

Data Merging & Contextualization:

Scenario: A user asks for detailed product specs. One endpoint returns product details, another returns shipping estimates, and the user input (llm["userLocation"]) provides a location.

Action: Python merges and correlates these disparate data sources, filters shipping data based on the user’s location, and outputs a single integrated result.

Outcome: The LLM can describe both product features and estimated delivery times in one coherent answer.
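One possible shape for the merge is sketched below. It assumes both endpoint responses have already been combined into source under the hypothetical keys "product" and "shipping"; how two endpoints are actually combined depends on your agent configuration.

```python
# Stand-ins for the objects the agent provides (hypothetical sample data).
llm = {"llm_query": "Tell me about the X100", "userLocation": "Berlin"}
source = {
    "product": {"name": "X100", "weight_kg": 1.2},
    "shipping": [
        {"location": "Berlin", "days": 2},
        {"location": "Madrid", "days": 4},
    ],
}


def merge_product_and_shipping(data, location):
    # Pick the shipping estimate for the user's location, if one exists.
    estimate = None
    for entry in data["shipping"]:
        if entry["location"] == location:
            estimate = entry
            break
    merged = dict(data["product"])  # copy so the original is not modified
    merged["delivery_days"] = estimate["days"] if estimate else None
    return merged


result = merge_product_and_shipping(source, llm["userLocation"])
```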

Calculating Derived Metrics:

Scenario: A fitness tracking API returns daily step counts for a user. The user asks, “How many steps did I average last week?”

Action: Python computes the weekly average from the returned daily totals and returns that number as the new source.

Outcome: The LLM gives a direct answer: “You averaged about 8,500 steps per day last week.”
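A small sketch of the weekly average, assuming the API returns one record per day with a hypothetical "steps" field. The dates and step counts are made-up sample data.

```python
# Stand-in for the API response: one record per day (hypothetical data).
source = [
    {"date": "2024-06-03", "steps": 8000},
    {"date": "2024-06-04", "steps": 9000},
    {"date": "2024-06-05", "steps": 8500},
    {"date": "2024-06-06", "steps": 7500},
    {"date": "2024-06-07", "steps": 9500},
    {"date": "2024-06-08", "steps": 8500},
    {"date": "2024-06-09", "steps": 8500},
]


def average_steps(days):
    # Avoid dividing by zero when no days were returned.
    if not days:
        return 0
    return round(sum(day["steps"] for day in days) / len(days))


# The computed average becomes the new source for the LLM.
result = {"average_daily_steps": average_steps(source)}
```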

Data Cleansing & Validation:

Scenario: The endpoint returns user-generated content containing extraneous characters, poorly formatted text, or missing fields.

Action: Python cleans the data—removing unwanted characters, correcting formatting, handling missing values—and outputs a standardized dataset.

Outcome: The LLM can provide a professional, polished response, free from messy data artifacts.
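The cleansing step might be sketched like this, using only built-in string methods. The "author" and "comment" fields and the "unknown" placeholder are assumptions for illustration.

```python
# Stand-in for messy user-generated content (hypothetical sample data).
source = [
    {"author": "  ana ", "comment": "Great\tproduct!!\n"},
    {"author": None, "comment": "  ok  "},
    {"comment": ""},
]


def clean_entry(entry):
    # Collapse runs of whitespace and trim both ends of the comment.
    comment = " ".join((entry.get("comment") or "").split())
    # Fall back to a placeholder when the author is missing or blank.
    author = (entry.get("author") or "").strip() or "unknown"
    return {"author": author, "comment": comment}


cleaned = [clean_entry(entry) for entry in source]
# Drop entries that have no comment text left after cleaning.
result = [entry for entry in cleaned if entry["comment"]]
```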

Enriching Responses With External Logic:

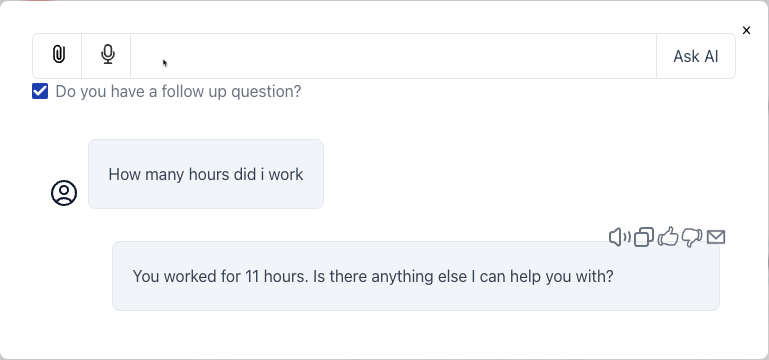

Scenario: The user provides a “userDailyTask” parameter asking how many hours they spent on “coding.” The API returns a table of tasks and hours.

Action: Python locates the “coding” entry, retrieves the hours, and inserts this information into a friendly sentence like “You spent 4 hours on coding today.”

Outcome: The LLM presents a refined, human-readable response that directly addresses the user’s query.

Conditional Formatting & Tiered Responses:

Scenario: The user retrieves their bank transactions via a REST API and wants a summary.

Action: Python checks if certain thresholds are met—e.g., if spending exceeds a certain limit—and adds warnings or tips. For example, if spending is high, add a message suggesting budgeting advice.

Outcome: The LLM returns a dynamic, context-aware answer: “You spent $2,300 this month, which is above your usual budget. Consider reviewing your spending habits.”
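A threshold check of this kind could be sketched as follows. The "monthlyBudget" parameter, the transaction fields, and the wording of the note are hypothetical.

```python
# Stand-ins for the objects the agent provides (hypothetical sample data).
llm = {"llm_query": "Summarize my spending", "monthlyBudget": 2000}
source = [
    {"description": "Rent", "amount": 1500},
    {"description": "Groceries", "amount": 500},
    {"description": "Travel", "amount": 300},
]


def summarize_spending(transactions, budget):
    total = sum(item["amount"] for item in transactions)
    summary = {"total_spent": total, "budget": budget}
    # Only add the note when spending exceeds the budget.
    if total > budget:
        summary["note"] = "Spending is above budget; consider reviewing it."
    return summary


result = summarize_spending(source, llm["monthlyBudget"])
```

Because the note is only present when the threshold is exceeded, the LLM can decide whether to include budgeting advice in its answer.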

Parameters

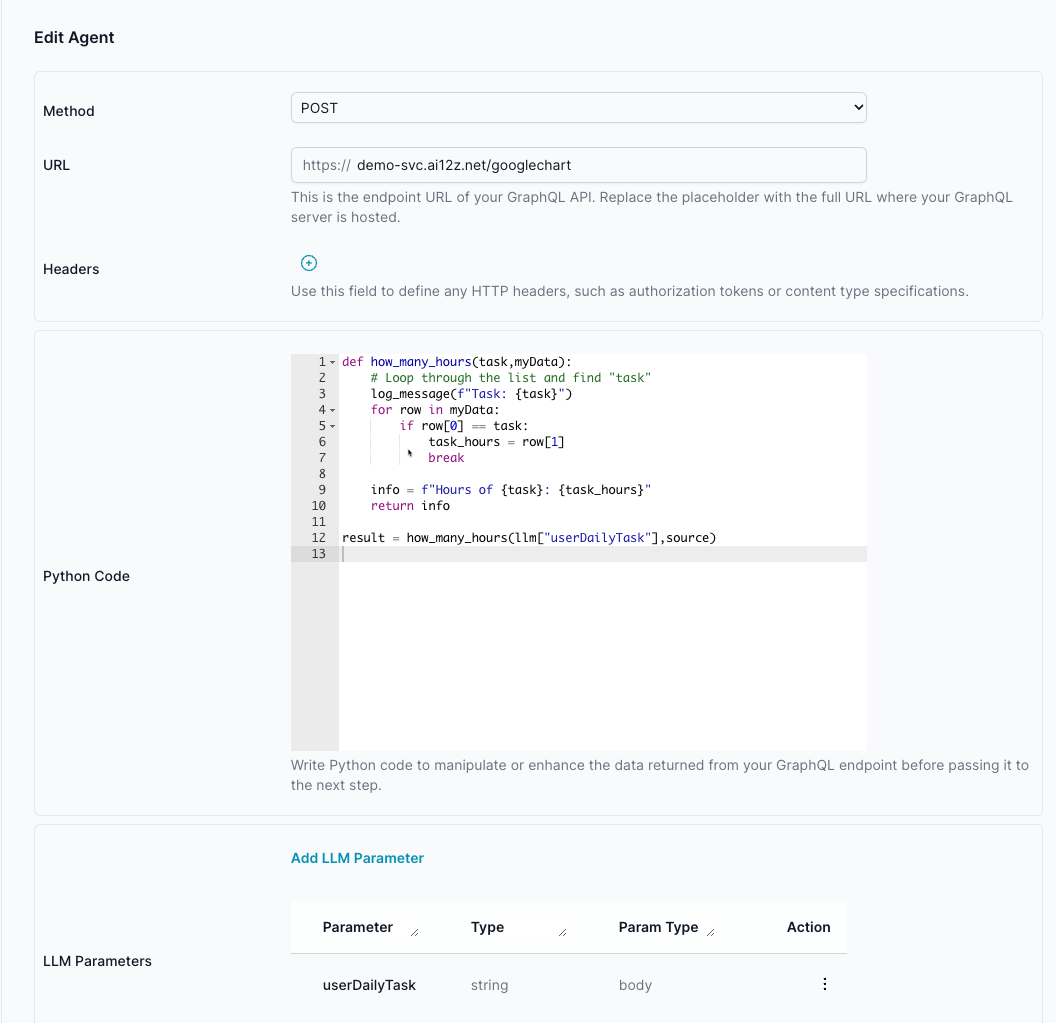

See the Edit Parameter dialog below:

The Edit Parameter dialog defines what appears in the Edit Agent dialog.

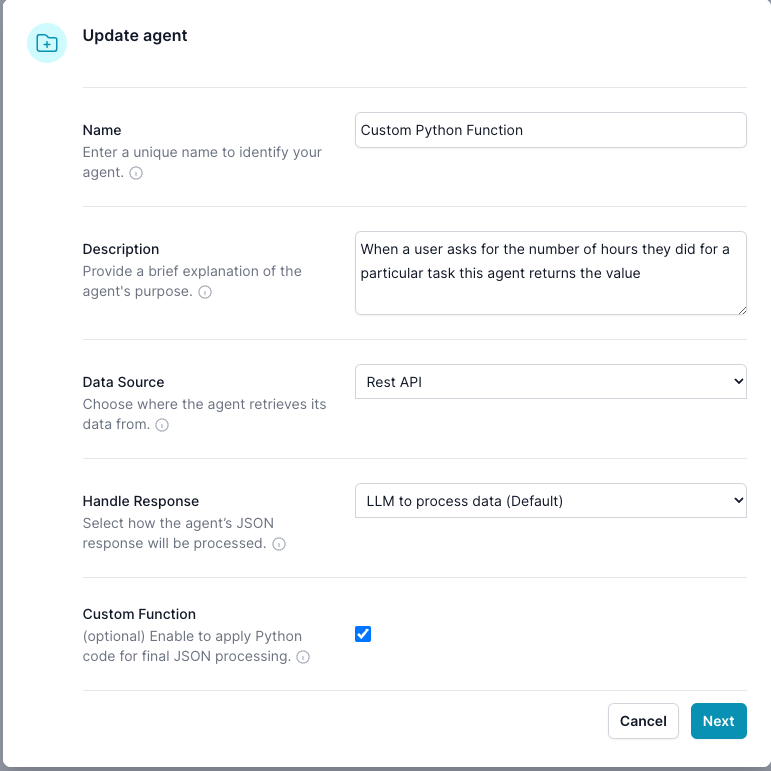

For this example, we set Handle Response -> LLM to process data (Default), Source -> Rest API, and Enable Custom Function -> true.

Edit Agent dialog

The endpoint returns this structured data:

    [
        ["Task", "Hours per Day"],
        ["Work", 11],
        ["Eat", 2],
        ["Commute", 2],
        ["Watch TV", 2],
        ["Sleep", 7]
    ]


Python receives two main objects:

llm: Contains the LLM parameters, including llm["llm_query"], which is always passed to the agent.

source: Contains the processed data from your chosen data source (if any), potentially post-processed by JSONata and then by Python.

The Python code

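    def how_many_hours(task, myData):
        # Loop through the rows and find the one whose first column is "task".
        log_message(f"Task: {task}")
        task_hours = None  # stays None if the task is not in the data
        for row in myData:
            if row[0] == task:
                task_hours = row[1]
                break
        if task_hours is None:
            return f"No hours found for {task}"
        return f"Hours of {task}: {task_hours}"

    # The value assigned to result replaces source before it is sent to the LLM.
    result = how_many_hours(llm["userDailyTask"], source)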

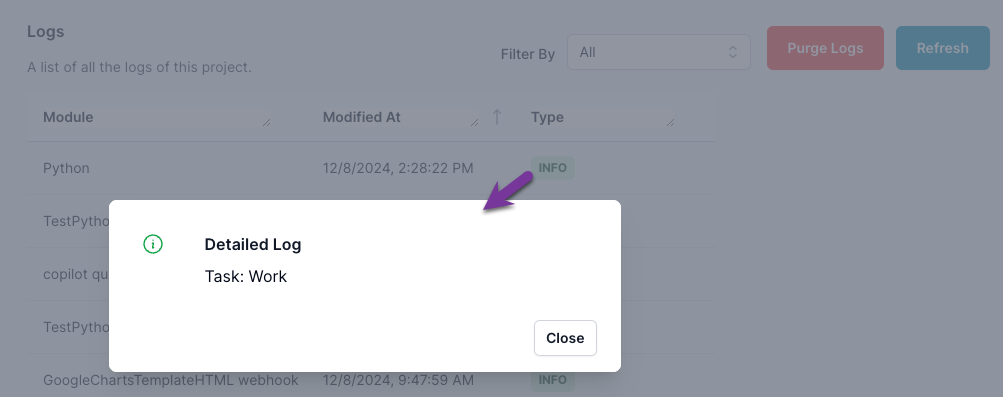

Output

Note that this is restricted Python: it only allows you to do safe things.

For debugging, log_message writes to the log so you can review the results.

Always assign your data to the variable result. This replaces the source data that is sent to the LLM. If the LLM also needs the original source, include it in result.

The llm and source objects are both available to your Python code.
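If the LLM needs both the computed answer and the original data, one option is to return them together, as in the sketch below. The dictionary keys "answer" and "data" are hypothetical, and the stand-in values for llm and source are only there so the sketch runs on its own.

```python
# Stand-ins for the objects the agent provides.
llm = {"llm_query": "How long do I sleep?", "userDailyTask": "Sleep"}
source = [
    ["Task", "Hours per Day"],
    ["Work", 11],
    ["Sleep", 7],
]

task = llm["userDailyTask"]
hours = None
for row in source[1:]:  # skip the header row
    if row[0] == task:
        hours = row[1]
        break

# Keep the original table alongside the computed answer.
result = {"answer": f"Hours of {task}: {hours}", "data": source}
```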