Answer AI Prompt Management

Overview

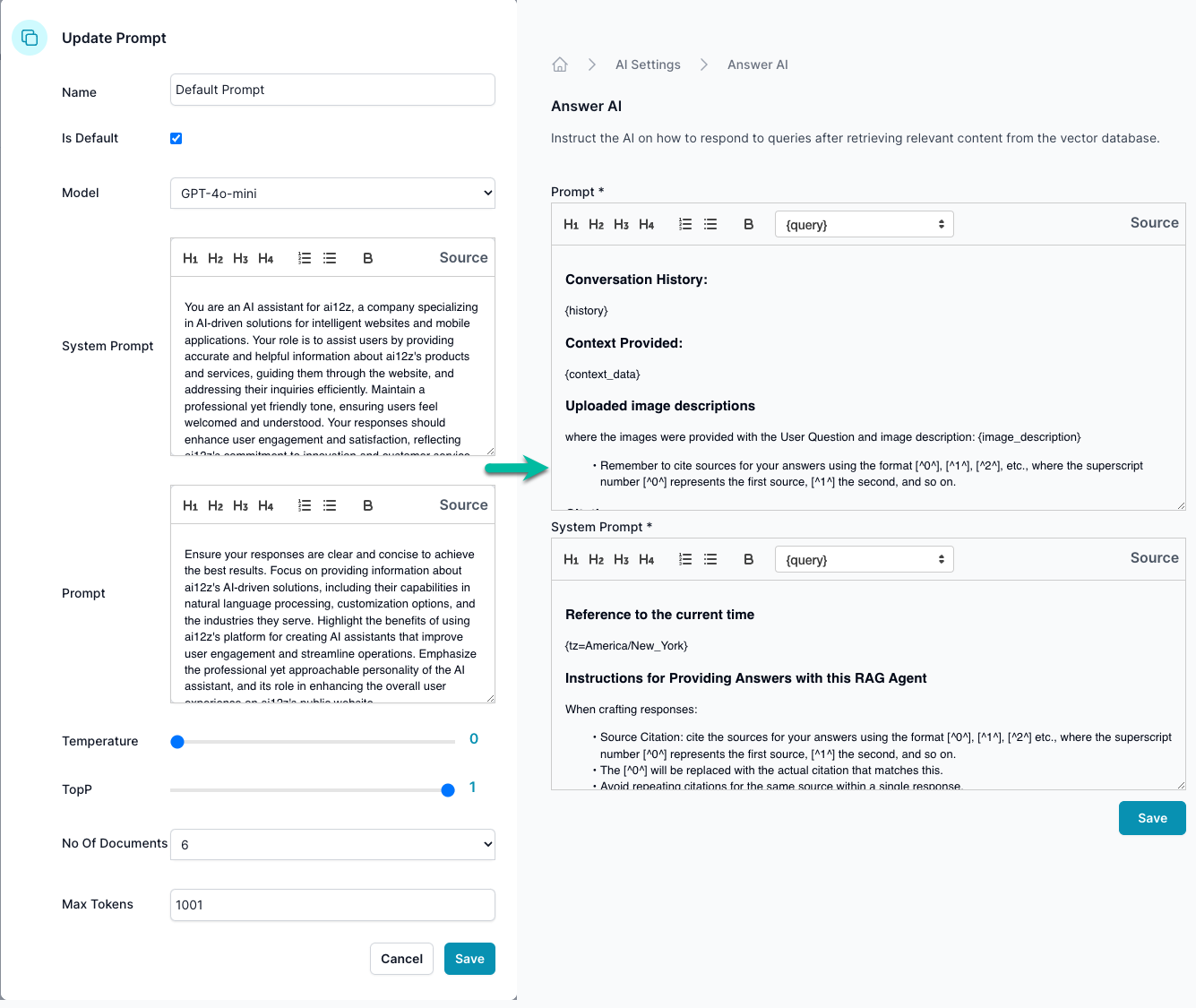

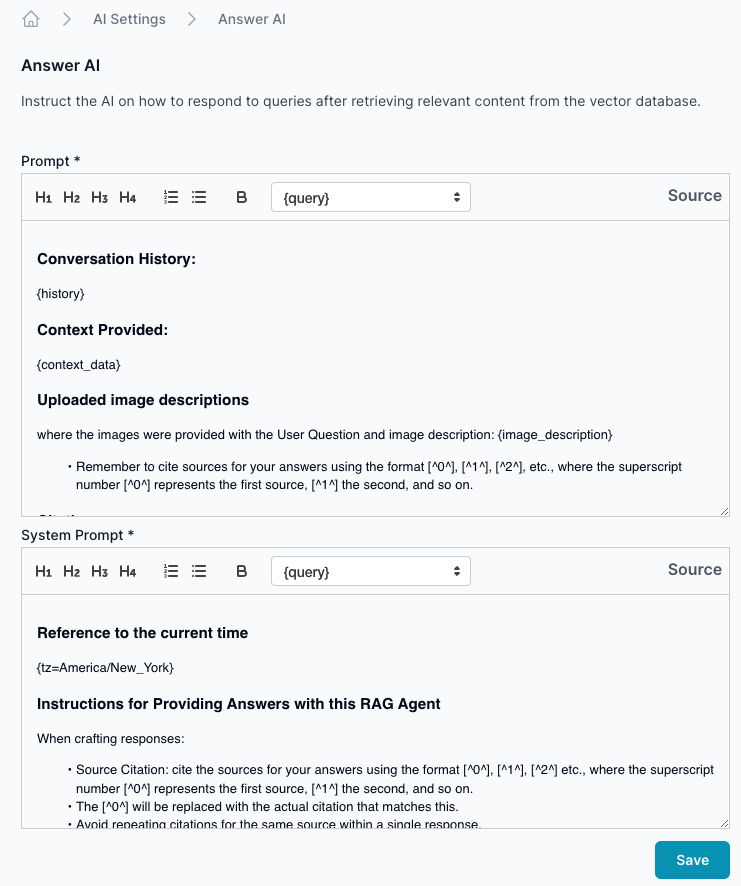

This documentation explains how to manage and use prompts for the Answer AI LLM. Prompts are structured inputs that guide the AI in generating responses to user queries. The prompt the AI receives combines the prompts from the AI Settings -> Answer AI -> Prompts section and the Prompts -> Prompts section.

Dynamic Tokens

Dynamic tokens are placeholders within a prompt that are replaced with dynamic content when the prompt is processed. You can use the following dynamic tokens:

- {query}: Inserts the user's question.

- {vector-query}: Inserts the user's question.

- {history}: Inserts the conversation history.

- {title}: Inserts the title of the page where the search is conducted.

- {origin}: Inserts the URL of the page.

- {language}: Inserts the language of the page.

- {referrer}: Inserts the referring URL that directed the user to the current page.

- {attributes}: Inserts additional attributes that JavaScript adds to the search control.

- {org_name}: Inserts the name of the organization for which the copilot is configured.

- {purpose}: Inserts the stated purpose of the AI bot.

- {org_url}: Inserts the domain URL of the organization the bot is configured for.

- {context_data}: Inserts the content Answer AI retrieves from the vector database.

- {tz=America/New_York}: Inserts the current date and time in the specified timezone (here, America/New_York) for the LLM to use.

- {image_upload_description}: Inserts descriptions, generated by vision AI, of images the site visitor uploads into the search box.
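
To illustrate how token substitution works in principle, here is a minimal sketch in Python. It is a hypothetical helper, not the Answer AI rendering engine: the function name render_prompt, the values dictionary, and the wording produced for {tz=...} are all assumptions. Tokens without a supplied value are left in place so they stand out when you review the prompt.

```python
from __future__ import annotations

import re
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

# Matches {name} or {name=argument}, e.g. {query}, {vector-query}, {tz=America/New_York}.
TOKEN_PATTERN = re.compile(r"\{([A-Za-z_-]+)(?:=([^{}]+))?\}")


def render_prompt(template: str, values: dict[str, str], now: datetime | None = None) -> str:
    """Replace dynamic tokens in a prompt template (hypothetical helper, not the Answer AI engine)."""

    def substitute(match: re.Match) -> str:
        name, arg = match.group(1), match.group(2)
        if name == "tz" and arg:
            # Assumed behavior: state the current date and time in the requested timezone.
            current = (now or datetime.now(timezone.utc)).astimezone(ZoneInfo(arg))
            return current.strftime("The current date and time is %A, %B %d, %Y, %H:%M %Z.")
        # Tokens with no supplied value are returned unchanged so they are easy to spot.
        return values.get(name, match.group(0))

    return TOKEN_PATTERN.sub(substitute, template)


print(render_prompt(
    "Answer this question from a visitor on '{title}': {query}",
    {"title": "Pricing", "query": "Do you offer a free trial?"},
))
# Answer this question from a visitor on 'Pricing': Do you offer a free trial?
```

Passing a substitution function to re.sub keeps the logic for each token in one place, and the optional now argument makes the timezone output reproducible in tests.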

Prompt Structure

Answer AI prompts are built from two UX components. The first is less technical and describes the purpose and tone of the bot; you can find it under Prompts in the project management left menu. AI creates this less technical prompt based on your organization's information. The second, under AI Settings -> Answer AI, requires more technical experience to edit. Note that the LLM receives the combination of both prompts and both system prompts.

Combining Base and Project Prompts

When a prompt is activated, the base prompt from AI Settings -> Answer AI and the project prompt are combined to form a complete instruction set for the AI. The combined prompt may include any of the tokens listed above, which are replaced with actual data when the prompt is used.
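
The combination step can be pictured with the following sketch. It assumes, purely for illustration, that each UI location holds a prompt plus a system prompt, that the base text comes before the project text, and that parts are joined by a blank line; the PromptPair type and combine_prompts function are hypothetical and do not describe Answer AI's actual merge logic. Tokens are kept verbatim during the merge, since they are only replaced when the prompt is used.

```python
from dataclasses import dataclass


@dataclass
class PromptPair:
    """A prompt plus its system prompt (hypothetical shape; field names are assumptions)."""
    system: str
    prompt: str


def _join(*parts: str) -> str:
    # Skip empty parts so a blank project field does not leave stray separators.
    return "\n\n".join(p.strip() for p in parts if p.strip())


def combine_prompts(base: PromptPair, project: PromptPair) -> PromptPair:
    """Merge the base prompts (AI Settings -> Answer AI) with the project prompts.

    Assumption: base text comes first and parts are separated by a blank line.
    Tokens such as {query} are not touched here; they are filled in later.
    """
    return PromptPair(
        system=_join(base.system, project.system),
        prompt=_join(base.prompt, project.prompt),
    )


base = PromptPair(
    system="You are a support assistant for {org_name}. Use only {context_data}.",
    prompt="Question: {query}",
)
project = PromptPair(system="Keep a friendly, concise tone.", prompt="")
combined = combine_prompts(base, project)
print(combined.system)
```

Keeping the two parts as separate fields mirrors the note above that the LLM sees both prompts and both system prompts.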

Editing Prompts

When editing a prompt, consider the following guidelines:

- Keep the instructions clear and concise so the AI can understand them.

- Review the combined prompt to ensure it makes sense once the base and project prompts merge.
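
Part of this review can be automated. The sketch below is a hypothetical helper, not an Answer AI feature: it scans a combined prompt for tokens whose names are not in the documented list, which usually points to a typo. How Answer AI treats an unrecognized token (for example, whether it passes it to the LLM as literal text) is not stated here, so treat the check as a safeguard under that assumption.

```python
import re

# Token names listed in the Dynamic Tokens section; {tz=...} also takes a timezone argument.
KNOWN_TOKENS = {
    "query", "vector-query", "history", "title", "origin", "language", "referrer",
    "attributes", "org_name", "purpose", "org_url", "context_data", "tz",
    "image_upload_description",
}

# Captures the token name in {name} or {name=argument}.
TOKEN_PATTERN = re.compile(r"\{([A-Za-z_-]+)(?:=[^{}]*)?\}")


def find_unknown_tokens(combined_prompt: str) -> list[str]:
    """Return token names that are not in the documented list, e.g. typos such as {qeury}."""
    names = TOKEN_PATTERN.findall(combined_prompt)
    return sorted({name for name in names if name not in KNOWN_TOKENS})


combined = "Answer {qeury} using {context_data}. Time: {tz=America/New_York}. Org: {Org_name}"
print(find_unknown_tokens(combined))  # ['Org_name', 'qeury']
```

The name check is case-sensitive, so a capitalized variant such as {Org_name} is flagged along with misspellings.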

Best Practices

- Test prompts to see how the AI interprets them with different types of user queries.

- Update prompts regularly to keep them aligned with changes in project goals or organizational strategy.

- Monitor the AI's responses to ensure they meet the expected quality and adjust the prompts accordingly.

Saving Changes

After making your changes:

- Review the prompt to ensure accuracy and completeness.

- Click the "Save" button to apply the changes.

- Test the updated prompt with a few queries to ensure it functions as expected.

Conclusion

Managing prompts effectively is crucial for the optimal performance of your AI interface. By following the guidelines outlined in this document, you can ensure that your AI provides relevant, accurate responses in line with your organization's objectives.